Introduction

Prometheus Operator

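Prometheus Operator uses Kubernetes custom resources to simplify deploying and configuring Prometheus, Alertmanager, and related monitoring components. The main resources are:

- Prometheus: defines a desired Prometheus server deployment;

- ServiceMonitor: declaratively specifies how a group of Kubernetes Services should be monitored; the Operator generates the Prometheus scrape configuration automatically from the current state of these objects;

- PrometheusRule: defines a set of Prometheus alerting or recording rules, which the Operator converts into rule files that Prometheus loads;

- Alertmanager: defines a desired Alertmanager deployment.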

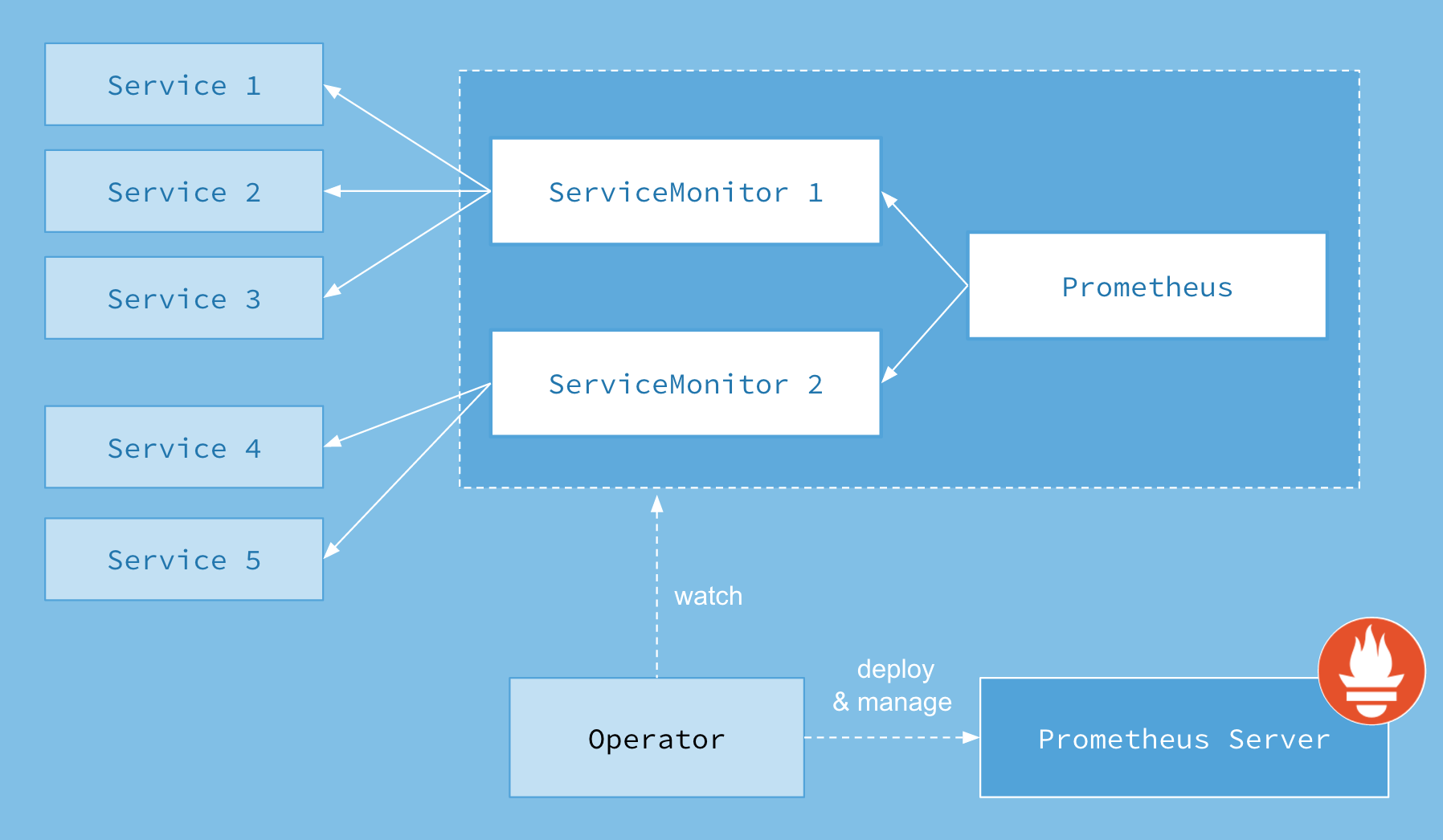

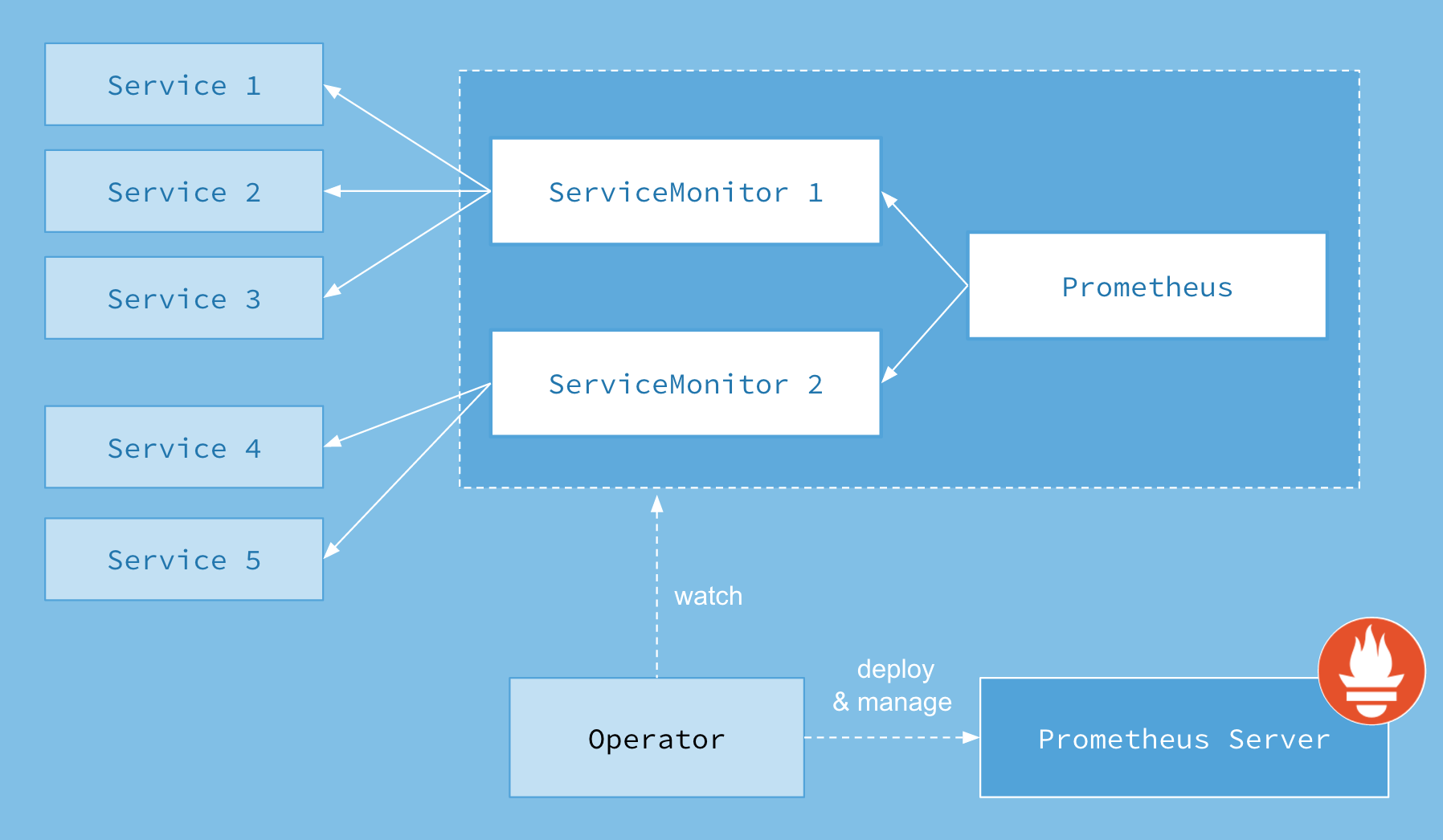

The Prometheus Operator architecture is shown below:

Prometheus Operator

kube-prometheus

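kube-prometheus provides example configurations for a complete cluster monitoring stack based on Prometheus and the Prometheus Operator. This includes deploying multiple Prometheus and Alertmanager instances, metrics exporters (such as node_exporter for collecting node metrics), scrape target configuration that links Prometheus to the various metrics endpoints, and example alerting rules that notify about potential problems in the cluster.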

Kubernetes and kube-prometheus version compatibility

kube-prometheus

Deployment

Deploying kube-prometheus

Environment

k8s: v1.22.17

kube-prometheus: release-0.10

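Download the manifests for the matching release. It is worth sorting the files into one directory per component first:

```shell
cd kube-prometheus-0.10.0/manifests
ls
# alertmanager blackbox grafana kubernetesControlPlane kubeStateMetrics node prometheus prometheusAdapter prometheusOperator setup
```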

Change the Prometheus retention period and mount persistent storage in prometheus-prometheus.yaml:

```yaml
spec:
  retention: 100d
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: nfs
        resources:
          requests:
            storage: 100Gi
```
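
A time-based retention of 100d on a 100Gi volume can still fill the disk if ingestion turns out heavier than expected. As a minimal sketch, the Prometheus resource also accepts a size-based limit through `retentionSize`. The 90GB value below is an assumption chosen to leave some headroom and is not part of the original setup.

```yaml
# Fragment of prometheus-prometheus.yaml (kind: Prometheus)
spec:
  retention: 100d        # time-based limit from the original configuration
  retentionSize: 90GB    # assumed value: size-based limit kept below the 100Gi volume
  storage:
    volumeClaimTemplate:
      spec:
        storageClassName: nfs
        resources:
          requests:
            storage: 100Gi
```

When both limits are set, Prometheus removes old data as soon as either one is exceeded.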

Add persistent storage for Grafana in grafana-deployment.yaml:

```yaml
      # Entries under spec.template.spec.volumes
      # - emptyDir: {}
      #   name: grafana-storage
      - name: grafana-storage
        persistentVolumeClaim:
          claimName: grafana-data
```
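
The Deployment refers to a claim named grafana-data, which has to exist before the Pod can start. A minimal sketch of such a claim follows. The storage class is assumed to be the same nfs class used for Prometheus, and the 10Gi size is a hypothetical value.

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: grafana-data          # must match claimName in grafana-deployment.yaml
  namespace: monitoring       # same namespace as the Grafana Deployment
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: nfs       # assumption: reuses the class from the Prometheus example
  resources:
    requests:
      storage: 10Gi           # hypothetical size
```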

Deploy

```shell
kubectl apply -f ./setup
# (remaining steps omitted)
```

Adding monitoring for kube-proxy, kube-controller-manager, etcd, and kube-scheduler

kube-proxy

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-proxy
  labels:
    k8s-app: kube-proxy
spec:
  selector:
    k8s-app: kube-proxy
  type: ClusterIP
  clusterIP: None
  ports:
  - name: http-metrics
    port: 10249
    targetPort: 10249
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  namespace: kube-system
  labels:
    k8s-app: kube-proxy
  name: kube-proxy
subsets:
- addresses:
  - ip: 192.168.2.50
  ports:
  - name: http-metrics
    port: 10249
    protocol: TCP
---
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app.kubernetes.io/name: kube-proxy
  name: kube-proxy
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: http-metrics
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: kube-proxy
```

Check that the resources were created:

```console
[root@master-1 kube]# kubectl get svc kube-proxy -n kube-system
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)     AGE
kube-proxy   ClusterIP   None         <none>        10249/TCP   58s
[root@master-1 kube]# kubectl get endpoints kube-proxy -n kube-system
NAME         ENDPOINTS            AGE
kube-proxy   192.168.2.50:10249   73s
[root@master-1 kube]# kubectl get servicemonitor kube-proxy -n monitoring
NAME         AGE
kube-proxy   94s
```

Edit the kube-proxy ConfigMap so that metrics are served on all interfaces:

```yaml
# kubectl edit cm -n kube-system kube-proxy
metricsBindAddress: 0.0.0.0:10249
```
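
On a kubeadm-provisioned cluster (an assumption here, suggested by the static Pod manifests under /etc/kubernetes/manifests used later), the setting normally sits inside the `config.conf` key of the ConfigMap. A rough sketch of where the field lives, with unrelated fields left out:

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-proxy
  namespace: kube-system
data:
  config.conf: |-
    apiVersion: kubeproxy.config.k8s.io/v1alpha1
    kind: KubeProxyConfiguration
    # An empty value falls back to 127.0.0.1:10249, which Prometheus cannot reach.
    metricsBindAddress: 0.0.0.0:10249
    # ... other fields unchanged ...
```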

Restart kube-proxy:

```shell
kubectl rollout restart daemonset kube-proxy -n kube-system
```

kube-controller-manager

Change the kube-controller-manager listen address in /etc/kubernetes/manifests/kube-controller-manager.yaml:

```yaml
    - --bind-address=0.0.0.0
```
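
For orientation, the flag belongs to the container command list of the static Pod manifest. A sketch of the surrounding structure, assuming a kubeadm-generated manifest (other flags are omitted):

```yaml
# Fragment of /etc/kubernetes/manifests/kube-controller-manager.yaml
spec:
  containers:
  - command:
    - kube-controller-manager
    - --bind-address=0.0.0.0   # kubeadm's default is 127.0.0.1
    # ... remaining flags unchanged ...
    name: kube-controller-manager
```

The kubelet watches this directory and recreates the static Pod once the file is saved, so no separate restart command is needed.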

Create the Service and Endpoints:

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-controller-manager
  labels:
    component: kube-controller-manager
spec:
  selector:
    component: kube-controller-manager
  ports:
  - name: https-metrics
    port: 10257
    targetPort: 10257
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  namespace: kube-system
  labels:
    component: kube-controller-manager
  name: kube-controller-manager
subsets:
- addresses:
  - ip: 192.168.2.50
  ports:
  - name: https-metrics
    port: 10257
    protocol: TCP
```

Verify:

```console
[root@master-1 kube]# kubectl get svc kube-controller-manager -n kube-system
NAME                      TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)     AGE
kube-controller-manager   ClusterIP   10.105.56.28   <none>        10257/TCP   23s
[root@master-1 kube]# kubectl get endpoints kube-controller-manager -n kube-system
NAME                      ENDPOINTS            AGE
kube-controller-manager   192.168.2.50:10257   38s
```

Check the selector in the ServiceMonitor definition:

```yaml
# kubectl get servicemonitor -n monitoring kube-controller-manager -oyaml
  selector:
    matchLabels:
      app.kubernetes.io/name: kube-controller-manager
```

Check the labels on the kube-controller-manager Pod:

```console
[root@master-1 kube]# kubectl get po kube-controller-manager-master-1 -n kube-system --show-labels
NAME                               READY   STATUS    RESTARTS      AGE   LABELS
kube-controller-manager-master-1   1/1     Running   1 (21h ago)   21h   component=kube-controller-manager,tier=control-plane
```

Update the ServiceMonitor selector so that it matches the `component` label:

```yaml
# kubectl edit servicemonitor -n monitoring kube-controller-manager
  selector:
    matchLabels:
      component: kube-controller-manager
```
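
An alternative that leaves the stock ServiceMonitor untouched is to give the Service the label that the ServiceMonitor already selects on. A sketch of that variant, assuming the shipped selector is `app.kubernetes.io/name: kube-controller-manager` as seen in the output above:

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-controller-manager
  labels:
    component: kube-controller-manager
    app.kubernetes.io/name: kube-controller-manager  # label the stock ServiceMonitor selects
spec:
  selector:
    component: kube-controller-manager                # label present on the static Pod
  ports:
  - name: https-metrics
    port: 10257
    targetPort: 10257
    protocol: TCP
```

With this approach, re-applying the kube-prometheus manifests does not undo the change, because the ServiceMonitor itself was never edited.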

kube-scheduler

Change the kube-scheduler listen address in /etc/kubernetes/manifests/kube-scheduler.yaml:

```yaml
    - --bind-address=0.0.0.0
```

Add the Service and Endpoints:

```yaml
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: kube-scheduler
  labels:
    component: kube-scheduler
spec:
  selector:
    component: kube-scheduler
  ports:
  - name: https-metrics
    port: 10259
    targetPort: 10259
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  namespace: kube-system
  labels:
    component: kube-scheduler
  name: kube-scheduler
subsets:
- addresses:
  - ip: 192.168.2.50
  ports:
  - name: https-metrics
    port: 10259
    protocol: TCP
```

Verify:

```console
[root@master-1 kube]# kubectl get endpoints kube-scheduler -n kube-system
NAME             ENDPOINTS            AGE
kube-scheduler   192.168.2.50:10259   20s
[root@master-1 kube]# kubectl get svc kube-scheduler -n kube-system
NAME             TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)     AGE
kube-scheduler   ClusterIP   10.99.74.73   <none>        10259/TCP   26s
```

Update the label that the ServiceMonitor selects on:

```yaml
# kubectl edit servicemonitor -n monitoring kube-scheduler
  selector:
    matchLabels:
      component: kube-scheduler
```

etcd

Create a Secret from the etcd client certificates. The certificate paths can be read from the kube-apiserver manifest:

```shell
grep etcd /etc/kubernetes/manifests/kube-apiserver.yaml
#    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
#    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
#    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
#    - --etcd-servers=https://127.0.0.1:2379

kubectl create secret generic etcd-ssl \
  --from-file=/etc/kubernetes/pki/etcd/ca.crt \
  --from-file=/etc/kubernetes/pki/apiserver-etcd-client.crt \
  --from-file=/etc/kubernetes/pki/apiserver-etcd-client.key \
  -n monitoring
```

Mount the Secret into Prometheus. The Operator exposes it inside the Pod under /etc/prometheus/secrets/etcd-ssl/:

```yaml
spec:
  secrets:
  - etcd-ssl
```

Create the ServiceMonitor, Service, and Endpoints:

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    k8s-app: etcd-k8s
  name: etcd-k8s
  namespace: monitoring
spec:
  endpoints:
  - interval: 30s
    port: port
    scheme: https
    tlsConfig:
      caFile: /etc/prometheus/secrets/etcd-ssl/ca.crt
      certFile: /etc/prometheus/secrets/etcd-ssl/apiserver-etcd-client.crt
      keyFile: /etc/prometheus/secrets/etcd-ssl/apiserver-etcd-client.key
      insecureSkipVerify: true
  jobLabel: k8s-app
  namespaceSelector:
    matchNames:
    - kube-system
  selector:
    matchLabels:
      k8s-app: etcd
---
apiVersion: v1
kind: Service
metadata:
  namespace: kube-system
  name: etcd-k8s
  labels:
    k8s-app: etcd
spec:
  type: ClusterIP
  clusterIP: None
  ports:
  - name: port
    port: 2379
    protocol: TCP
---
apiVersion: v1
kind: Endpoints
metadata:
  namespace: kube-system
  labels:
    k8s-app: etcd
  name: etcd-k8s
subsets:
- addresses:
  - ip: 192.168.2.50
    nodeName: node1
  ports:
  - name: port
    port: 2379
    protocol: TCP
```
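
The Endpoints object lists a single etcd member. Because the etcd-k8s Service has no selector, every additional member has to be added by hand under the same subset. A sketch for a three-member control plane, where 192.168.2.51 and 192.168.2.52 are placeholder addresses and not part of the original environment:

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  namespace: kube-system
  labels:
    k8s-app: etcd
  name: etcd-k8s            # must equal the Service name
subsets:
- addresses:
  - ip: 192.168.2.50        # from the original example
  - ip: 192.168.2.51        # hypothetical second member
  - ip: 192.168.2.52        # hypothetical third member
  ports:
  - name: port              # must equal the port name used by the Service and ServiceMonitor
    port: 2379
    protocol: TCP
```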

Adding custom scrape configuration

Enable additionalScrapeConfigs in the Prometheus resource:

```yaml
spec:
  additionalScrapeConfigs:
    name: prometheus-additional-configs
    key: prometheus-configs.yaml
```

prometheus-configs.yaml

```yaml
- job_name: additional-configs
  static_configs:
  - targets: ['192.168.2.201:9100']
```
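
Static targets can also carry extra labels, which makes them easier to tell apart from in-cluster targets. A small sketch of the same file with that addition; the `env` label, the second target, and the interval override are hypothetical:

```yaml
- job_name: additional-configs
  scrape_interval: 30s             # optional per-job override (assumed value)
  static_configs:
  - targets:
    - '192.168.2.201:9100'         # from the original example
    - '192.168.2.202:9100'         # hypothetical second host
    labels:
      env: external                # hypothetical label attached to every target in this group
```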

Create the Secret that holds the file:

```shell
kubectl create secret generic prometheus-additional-configs --from-file=prometheus-configs.yaml -n monitoring
```

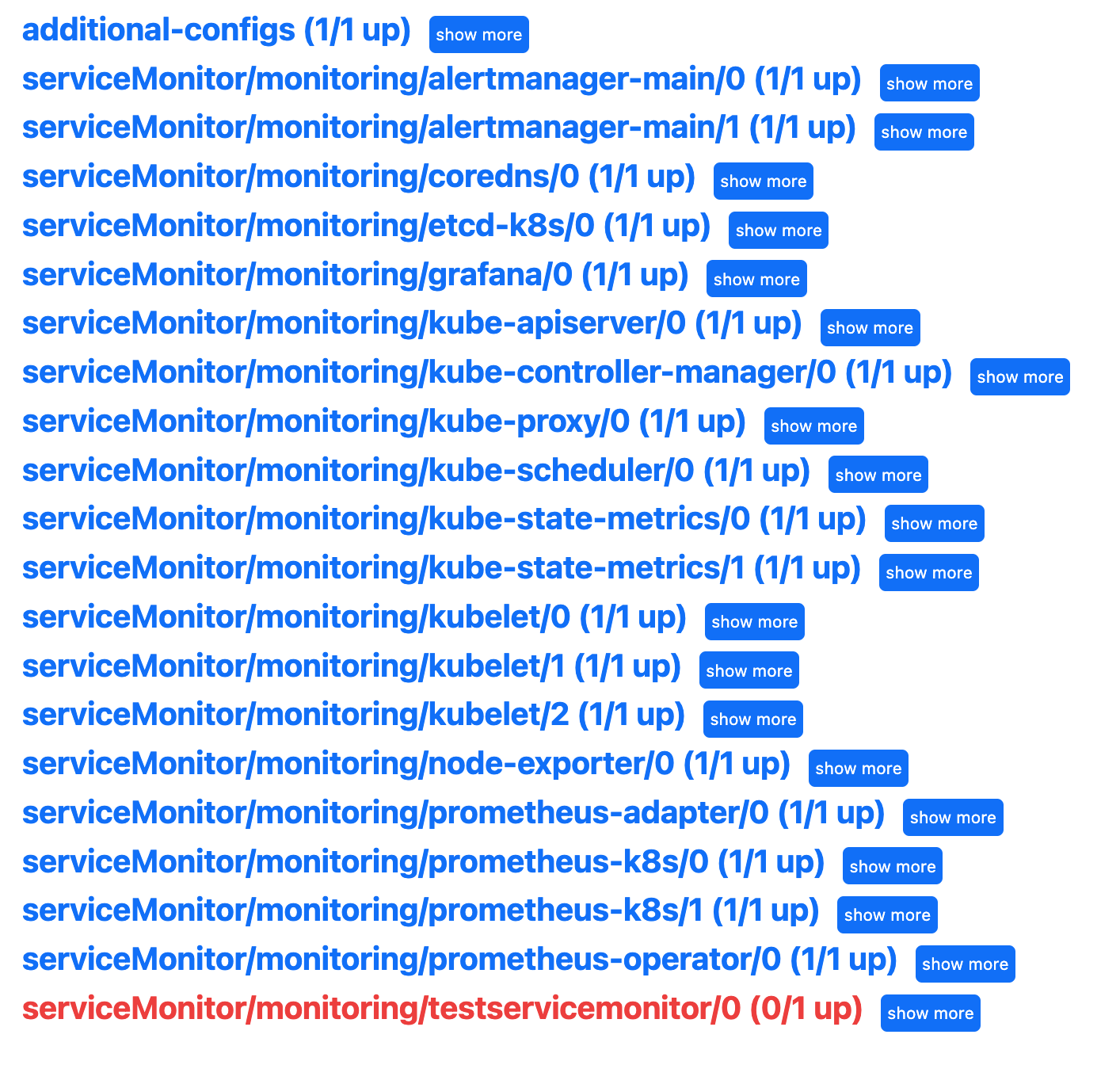

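ServiceMonitor

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app: test
  name: testservicemonitor
  namespace: monitoring
spec:
  endpoints:
  - interval: 15s
    port: https
  namespaceSelector:
    any: true
  selector:
    # matchLabels compares values literally, so "app: .*" does not act as a wildcard.
    # An Exists expression selects every Service that carries an app label.
    matchExpressions:
    - key: app
      operator: Exists
```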
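
Selecting Services in every namespace requires cluster-wide list and watch rights, which is what leads to the permission error described next. If only a few namespaces matter, a narrower selector may avoid the problem. A sketch, assuming that default and kube-system are the namespaces of interest and that the prometheus-k8s ServiceAccount already holds a Role in them (kube-prometheus ships Roles for some namespaces, but this should be checked against the actual install):

```yaml
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  labels:
    app: test
  name: testservicemonitor
  namespace: monitoring
spec:
  endpoints:
  - interval: 15s
    port: https
  namespaceSelector:
    matchNames:            # explicit namespaces instead of any: true
    - default              # assumed namespace of interest
    - kube-system          # assumed namespace of interest
  selector:
    matchExpressions:
    - key: app             # every Service that has an app label
      operator: Exists
```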

Error message: insufficient permissions

```text
ts=2023-10-29T09:08:06.295Z caller=klog.go:116 level=error component=k8s_client_runtime func=ErrorDepth msg="pkg/mod/k8s.io/client-go@v0.22.4/tools/cache/reflector.go:167: Failed to watch *v1.Endpoints: failed to list *v1.Endpoints: endpoints is forbidden: User \"system:serviceaccount:monitoring:prometheus-k8s\" cannot list resource \"endpoints\" in API group \"\" at the cluster scope"
```

The prometheus-k8s ServiceAccount is bound to the prometheus-k8s ClusterRole, so add the missing permissions to that ClusterRole:

```yaml
# kubectl edit clusterrole prometheus-k8s
- apiGroups:
  - ""
  resources:
  - services
  - endpoints
  - pods
  verbs:
  - get
  - list
  - watch
```
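
Changes made with `kubectl edit` are lost when the original manifests are re-applied, so it may be preferable to keep the rule in a file. A sketch of the complete ClusterRole follows. The first two rules are assumed to mirror what the stock prometheus-k8s ClusterRole contains; verify them with `kubectl get clusterrole prometheus-k8s -o yaml` before applying.

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: prometheus-k8s
rules:
- apiGroups: [""]                  # assumed existing rule
  resources: ["nodes/metrics"]
  verbs: ["get"]
- nonResourceURLs: ["/metrics"]    # assumed existing rule
  verbs: ["get"]
- apiGroups: [""]                  # rule added in this section
  resources: ["services", "endpoints", "pods"]
  verbs: ["get", "list", "watch"]
```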

Configuring a PrometheusRule

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  labels:
    prometheus: k8s
    role: alert-rules
  name: k8s-rules
  namespace: monitoring
spec:
  groups:
  - name: Test-Rule
    rules:
    - alert: "Server offline"
      annotations:
        description: Host {{ $labels.instance }} is offline
        summary: Host {{ $labels.instance }} is offline {{ printf "%.2f" $value }}
      expr: |
        (
          up{job=~"node-exporter"} == 0
        )
      for: 1m
      labels:
        severity: p1
```
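
Whether Prometheus loads this rule depends on the `ruleSelector` of the Prometheus resource. The labels `prometheus: k8s` and `role: alert-rules` only matter if that selector asks for them. A hypothetical fragment of prometheus-prometheus.yaml that would pick up the rule above is sketched here; the selector in an actual install may simply be empty, in which case every PrometheusRule matches.

```yaml
# Fragment of the Prometheus resource (hypothetical selector values)
spec:
  ruleSelector:
    matchLabels:
      prometheus: k8s
      role: alert-rules
  ruleNamespaceSelector: {}   # empty selector: consider rules in all namespaces
```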

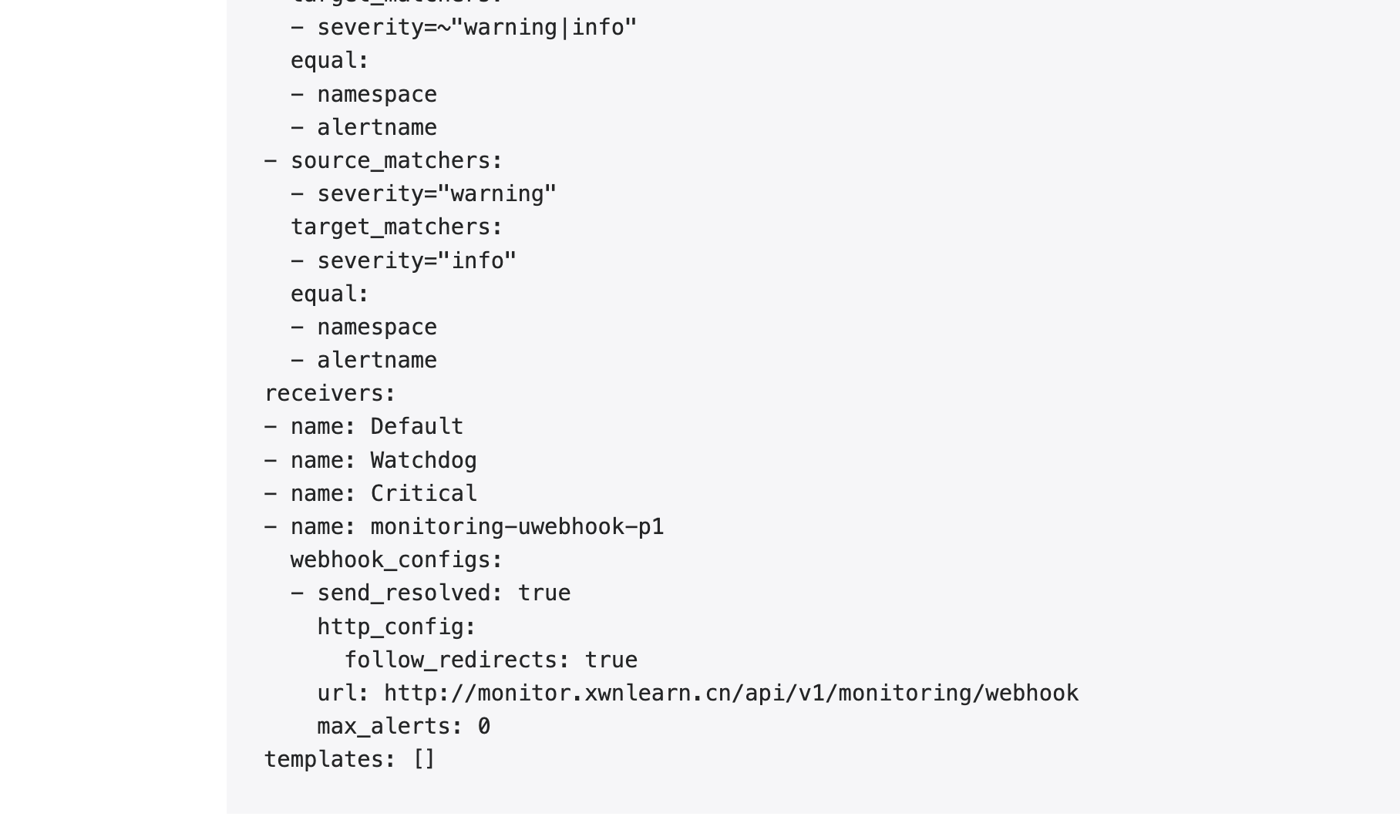

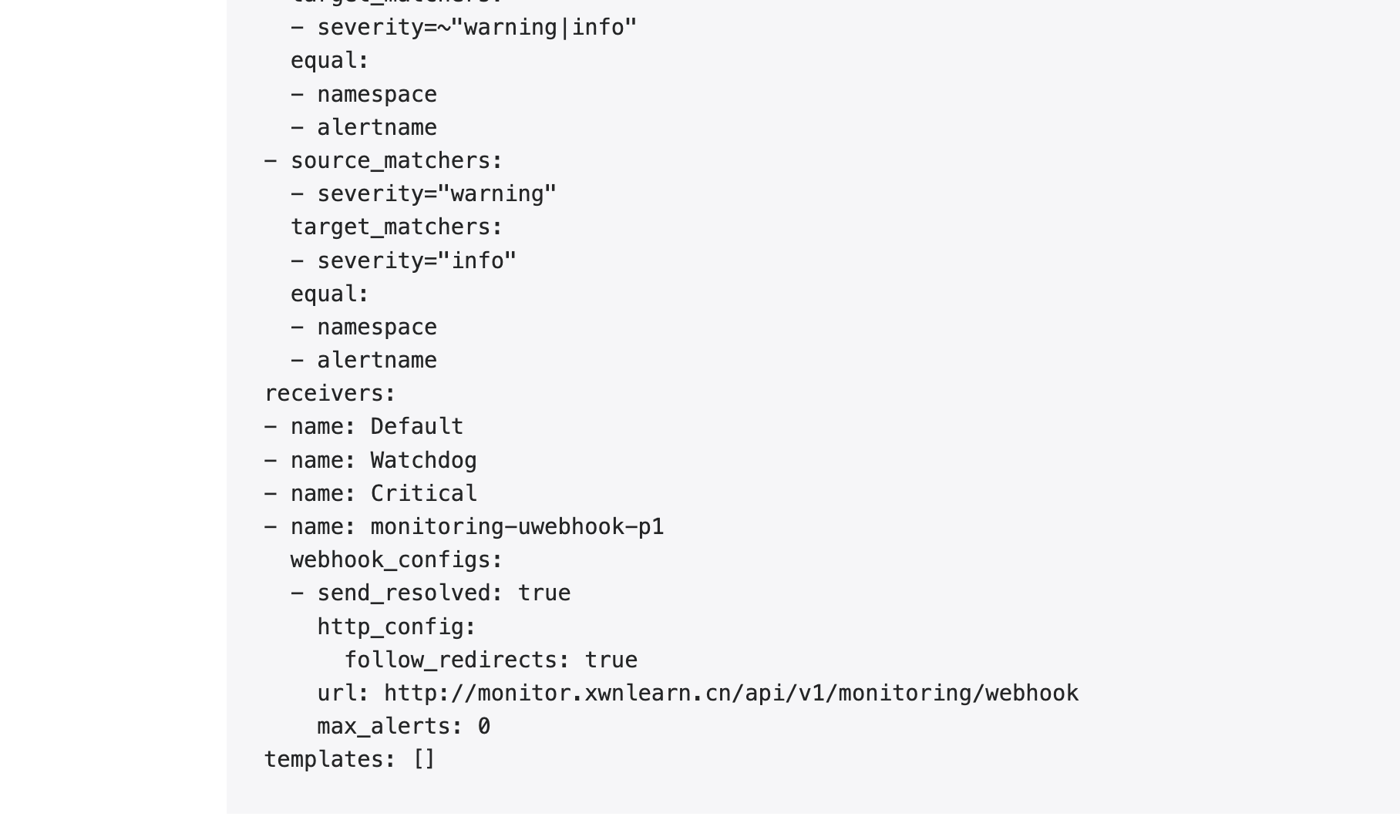

Configuring Alertmanager

In alertmanager-alertmanager.yaml, add a selector for the label carried by the AlertmanagerConfig objects:

```yaml
  alertmanagerConfigSelector: # matches AlertmanagerConfig objects by label
    matchLabels:
      alertmanagerConfig: example
```
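
By default the Operator only looks for AlertmanagerConfig objects in the Alertmanager's own namespace. If configurations should also be picked up from other namespaces, a namespace selector can be added next to the label selector. A sketch, in which the `alerting: enabled` namespace label is hypothetical:

```yaml
# Fragment of alertmanager-alertmanager.yaml (kind: Alertmanager)
spec:
  alertmanagerConfigSelector:
    matchLabels:
      alertmanagerConfig: example
  alertmanagerConfigNamespaceSelector:   # optional; omit to search only the Alertmanager's namespace
    matchLabels:
      alerting: enabled                  # hypothetical label set on the namespaces to include
```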

AlertmanagerConfig.yaml

```yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: uwebhook
  namespace: monitoring
  labels:
    alertmanagerConfig: example
spec:
  receivers:
  - name: p1
    webhookConfigs:
    - url: http://monitor.xwnlearn.cn/api/v1/monitoring/webhook
      sendResolved: true
  route:
    groupBy: ["namespace"]
    groupWait: 30s
    groupInterval: 5m
    repeatInterval: 12h
    receiver: p1
    routes:
    - receiver: p1
      # AlertmanagerConfig routes take a matchers list (name/value)
      # rather than Alertmanager's native "match" map.
      matchers:
      - name: severity
        value: p1
```

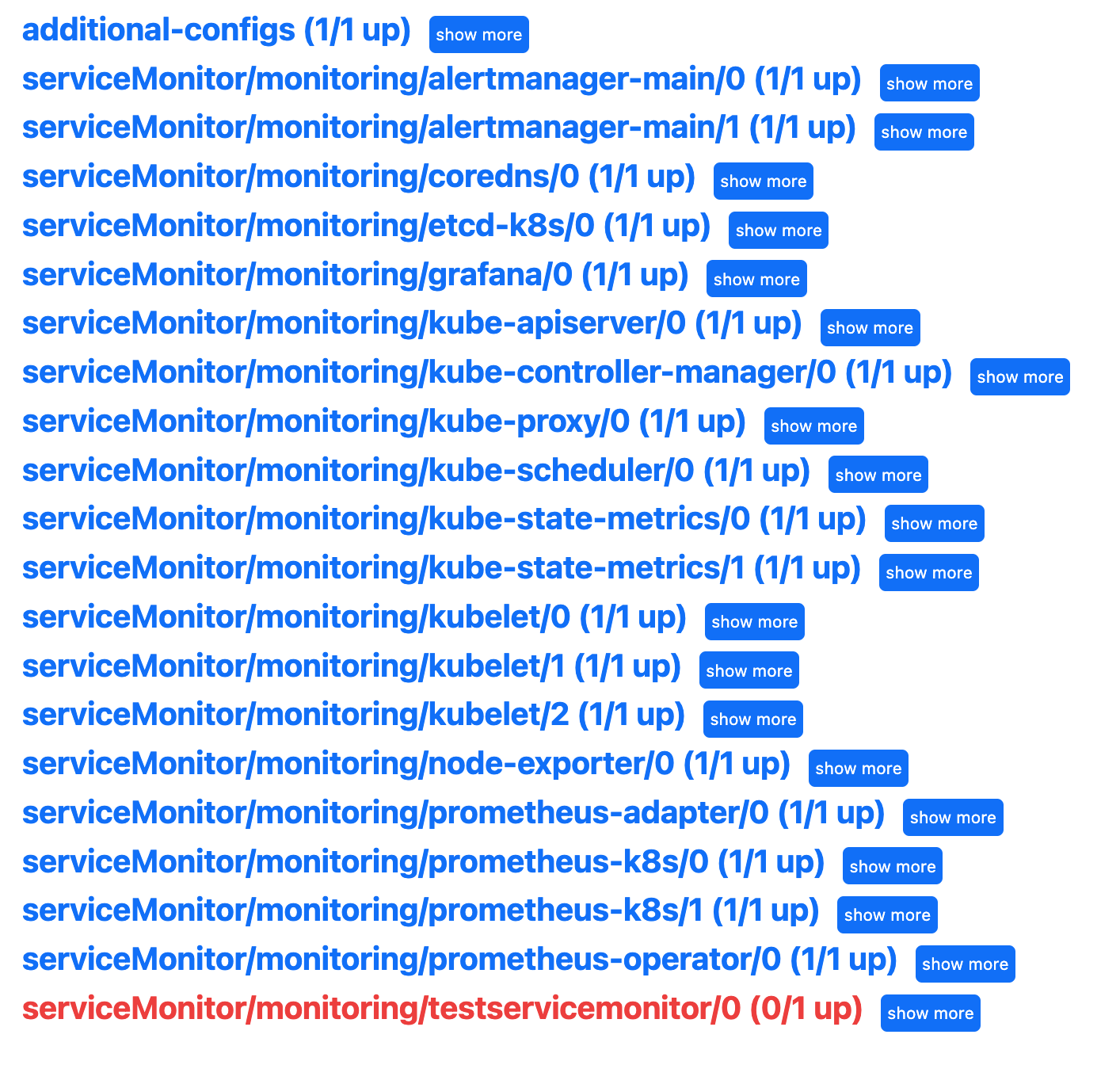

Viewing the results

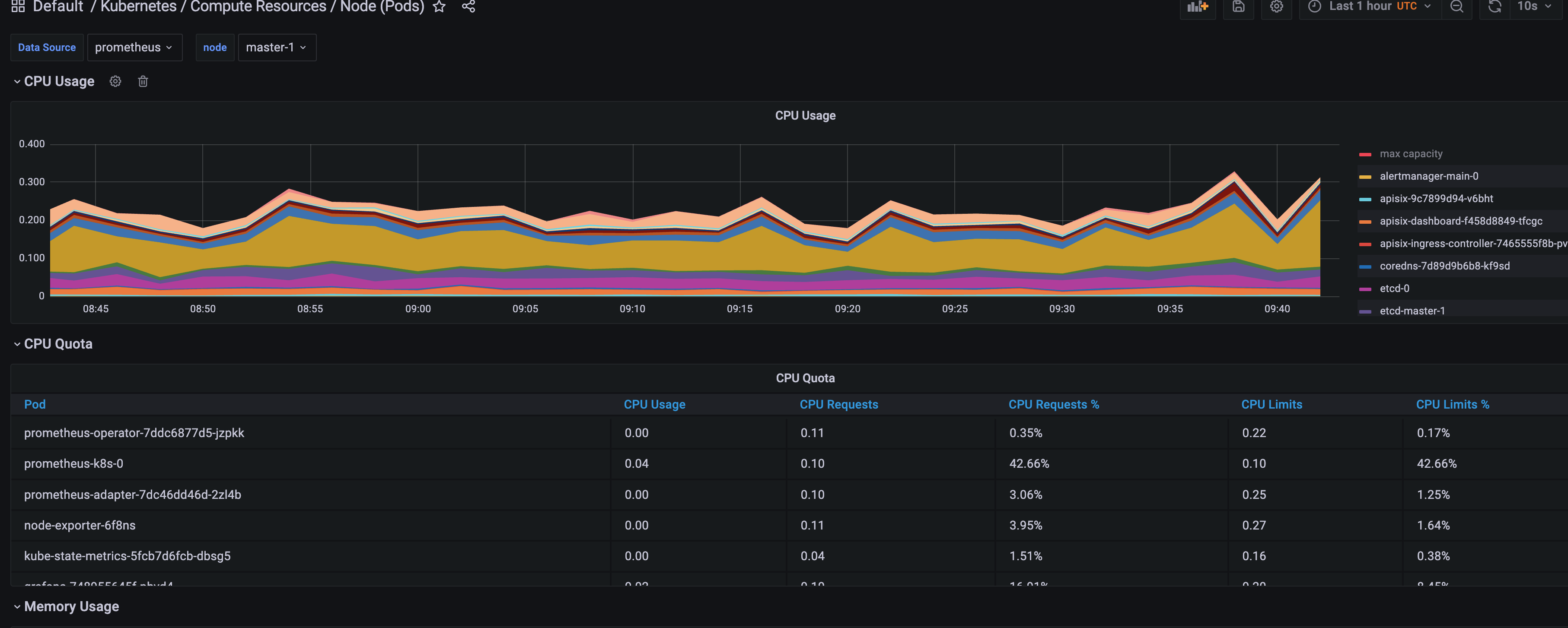

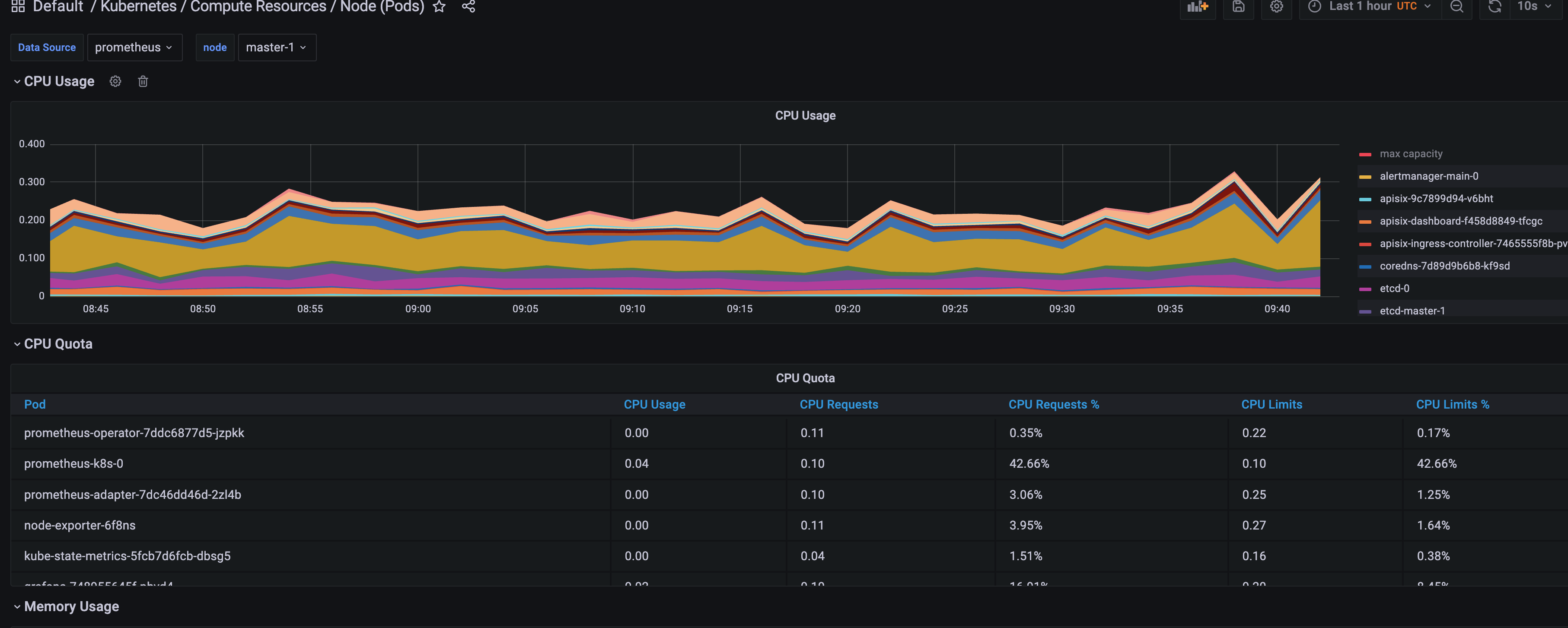

prometheus

alert

grafana