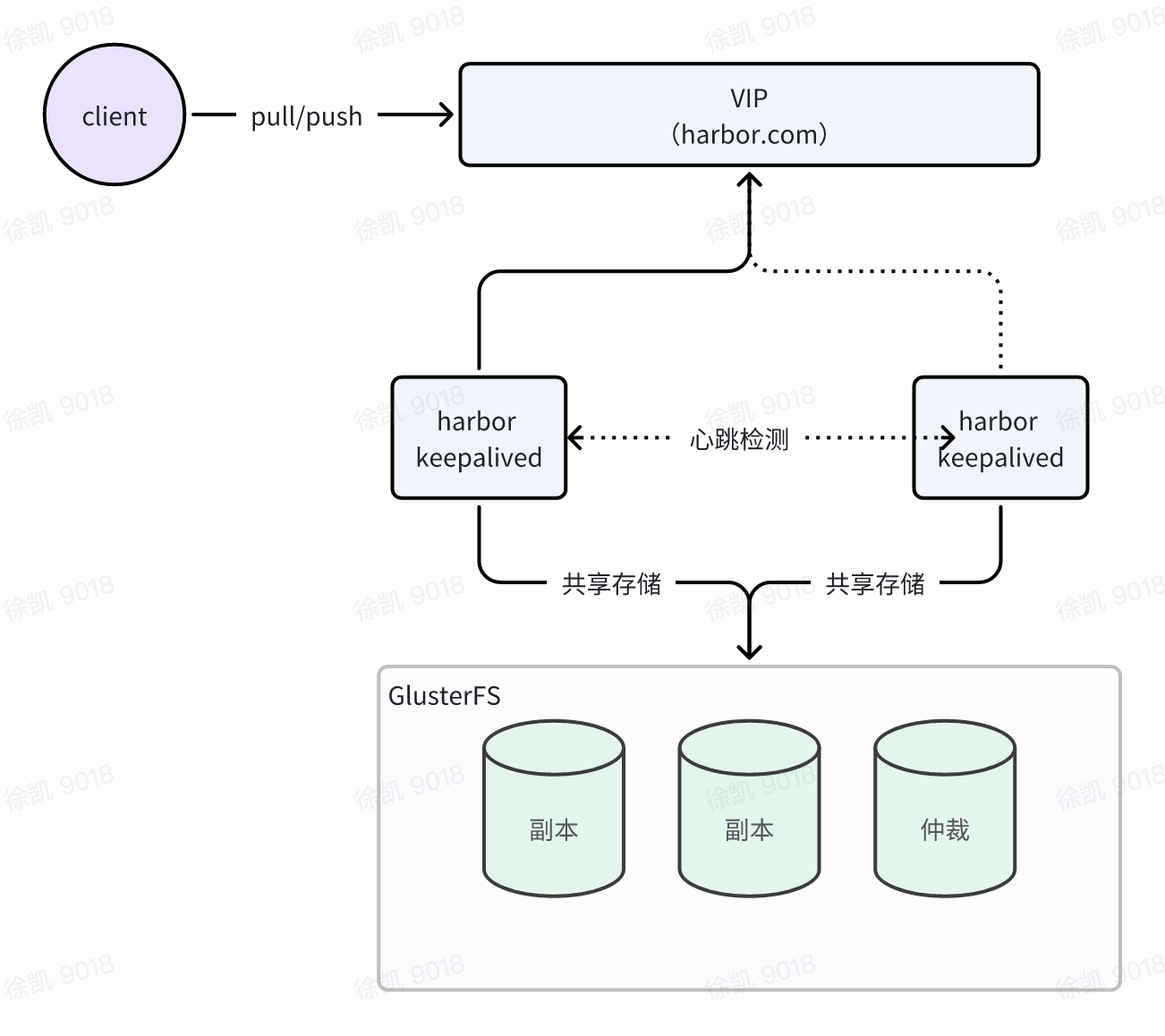

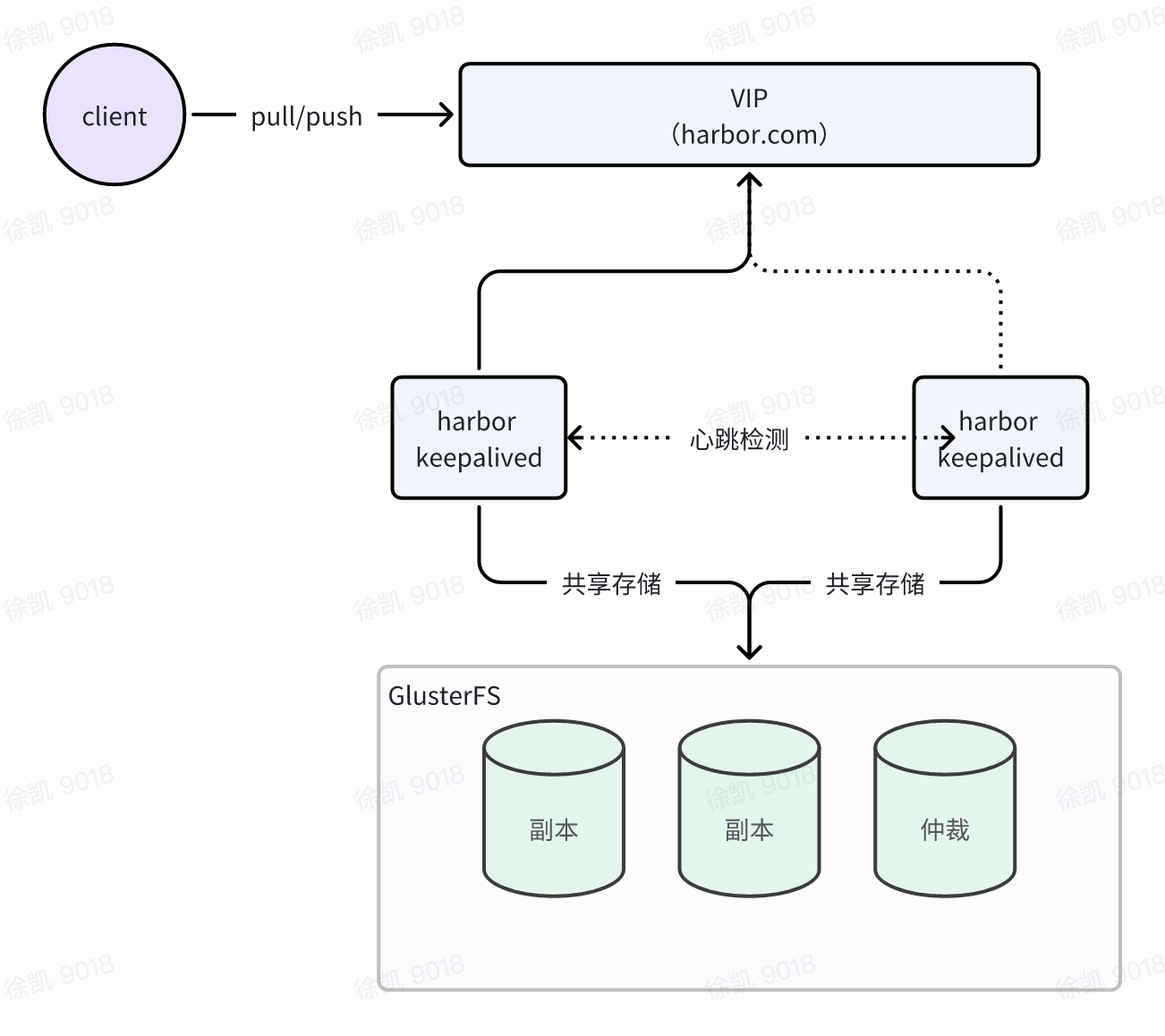

Architecture diagram

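Planning

| IP | OS | Resources | Role |
|---|---|---|---|
| 10.1.1.10 | Debian 12 | 2 CPU, 4 GB RAM, 100 GB + 500 GB disks | GlusterFS storage node, Harbor primary node, keepalived |
| 10.1.1.11 | Debian 12 | 2 CPU, 4 GB RAM, 100 GB + 500 GB disks | GlusterFS storage node, Harbor secondary node, keepalived |
| 10.1.1.12 | Debian 12 | 2 CPU, 4 GB RAM, 100 GB + 500 GB disks | GlusterFS storage node |
| 10.1.1.15 (VIP) | | | Virtual IP held by keepalived |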

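Deployment

Configure hostnames and /etc/hosts

```
# Set the hostname: run the matching command on each node
hostnamectl set-hostname gluster-node1   # on 10.1.1.10
hostnamectl set-hostname gluster-node2   # on 10.1.1.11
hostnamectl set-hostname gluster-node3   # on 10.1.1.12

# /etc/hosts (identical on all three nodes)
10.1.1.10 gluster-node1
10.1.1.11 gluster-node2
10.1.1.12 gluster-node3
# gluster-work is a shared alias that resolves to all three nodes
10.1.1.10 gluster-work
10.1.1.11 gluster-work
10.1.1.12 gluster-work
```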
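If you append these entries by hand and later re-run the step, you get duplicate lines. Below is a minimal sketch that adds the entries idempotently. The function names `ensure_host_entry` and `apply_gluster_hosts` are hypothetical and not part of any tool. The hosts file path is a parameter, so you can try the logic on a scratch file first:

```shell
#!/bin/bash
# Hypothetical helper: append "IP NAME" to a hosts file only when no existing
# line already maps that IP to that name, so re-running does not duplicate it.
ensure_host_entry() {
  local file="$1" ip="$2" name="$3"
  # awk exits 0 if some line has the IP as its first field and the name
  # among the remaining fields, and exits 1 otherwise.
  if ! awk -v ip="$ip" -v name="$name" '
      $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
      END { exit !found }' "$file"; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# Apply the node entries and the shared gluster-work alias used in this article.
apply_gluster_hosts() {
  local file="${1:-/etc/hosts}" ip name
  while read -r ip name; do
    ensure_host_entry "$file" "$ip" "$name"
  done <<'EOF'
10.1.1.10 gluster-node1
10.1.1.11 gluster-node2
10.1.1.12 gluster-node3
10.1.1.10 gluster-work
10.1.1.11 gluster-work
10.1.1.12 gluster-work
EOF
}
```

On each node, calling `apply_gluster_hosts` with no argument edits `/etc/hosts`. Running it a second time leaves the file unchanged.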

Configure time synchronization

Set the time zone to Asia/Shanghai:

```
timedatectl set-timezone "Asia/Shanghai"
```

Check that the clock is synchronized:

```
# timedatectl
Local time: Wed 2025-01-15 15:23:21 CST
Universal time: Wed 2025-01-15 07:23:21 UTC
RTC time: Wed 2025-01-15 07:23:20
Time zone: Asia/Shanghai (CST, +0800)
System clock synchronized: yes
NTP service: active
RTC in local TZ: no
```
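Checking the `System clock synchronized` line by eye on three nodes is easy to get wrong. `timedatectl show` prints the same state in machine-readable form as `NTPSynchronized=yes` or `NTPSynchronized=no`. Below is a minimal sketch of a check with hypothetical function names. It reads that output from stdin, so the parsing can be tested without systemd:

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the `timedatectl show` output on stdin
# reports NTPSynchronized=yes.
ntp_is_synced() {
  grep -qx 'NTPSynchronized=yes'
}

# Report the state of the local node; exit status is 0 when synchronized.
check_local_clock() {
  if timedatectl show | ntp_is_synced; then
    echo "$(hostname): clock synchronized"
  else
    echo "$(hostname): clock NOT synchronized" >&2
    return 1
  fi
}
```

Run `check_local_clock` on every node. A non-zero exit status means that node's clock is not yet synchronized.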

System tuning

Raise the open-file, process and core-dump limits for login sessions (limits.conf) and for services started by systemd (system.conf):

```
cat << EOF >> /etc/security/limits.conf
root soft nofile 655360
root hard nofile 655360
root soft nproc 655360
root hard nproc 655360
root soft core unlimited
root hard core unlimited
* soft nofile 655360
* hard nofile 655360
* soft nproc 655360
* hard nproc 655360
* soft core unlimited
* hard core unlimited
EOF

cat << EOF >> /etc/systemd/system.conf
DefaultLimitCORE=infinity
DefaultLimitNOFILE=655360
DefaultLimitNPROC=655360
EOF

# Re-execute systemd so services (re)started afterwards get the new defaults (or reboot)
systemctl daemon-reexec
```
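Appending with `cat >> ...` adds the lines again every time the step is repeated. An alternative is to write the same values as drop-in files under `/etc/security/limits.d/` and `/etc/systemd/system.conf.d/`, which are read in addition to the main files. The sketch below assumes drop-in directories are acceptable on your hosts. The function name and the file name `99-limits.conf` are hypothetical:

```shell
#!/bin/bash
# Hypothetical helper: write the limits from the step above as drop-in files.
# Re-running overwrites the files instead of appending duplicate lines.
# ROOT (default "/") lets the function target a scratch directory.
write_limit_dropins() {
  local root="${1:-/}"
  mkdir -p "$root/etc/security/limits.d" "$root/etc/systemd/system.conf.d"

  # Read by pam_limits for new login sessions
  cat > "$root/etc/security/limits.d/99-limits.conf" <<'EOF'
root soft nofile 655360
root hard nofile 655360
root soft nproc 655360
root hard nproc 655360
root soft core unlimited
root hard core unlimited
* soft nofile 655360
* hard nofile 655360
* soft nproc 655360
* hard nproc 655360
* soft core unlimited
* hard core unlimited
EOF

  # Read by systemd (PID 1); drop-ins need the [Manager] section header
  cat > "$root/etc/systemd/system.conf.d/99-limits.conf" <<'EOF'
[Manager]
DefaultLimitCORE=infinity
DefaultLimitNOFILE=655360
DefaultLimitNPROC=655360
EOF
}
```

Use this instead of the appends, not in addition to them. On a real node, call `write_limit_dropins /`, then run `systemctl daemon-reexec` (or reboot) and log in again. After that, `systemctl show -p DefaultLimitNOFILE` and `ulimit -n` should both report 655360.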

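Disk formatting

On each machine, format the 500 GB data disk as ext4 and configure it to be mounted at /brick1 at boot. The UUID differs on every machine; read it with `blkid`.

```
# /etc/fstab
UUID=570e9188-6483-4292-80ea-62a9c7ab543e /brick1 ext4 defaults 1 2
```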
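The formatting commands themselves are not shown above. Below is a minimal sketch with the hypothetical helper names `fstab_line` and `prepare_brick`. It assumes the 500 GB data disk appears as `/dev/sdb`; that device name is an assumption, so check it with `lsblk` before running anything:

```shell
#!/bin/bash
# Hypothetical helpers for preparing the brick disk on one node.
# WARNING: prepare_brick runs mkfs.ext4, which erases everything on DEVICE.

# Print an /etc/fstab line in the same form as the example above.
fstab_line() {
  local uuid="$1" mnt="$2"
  printf 'UUID=%s %s ext4 defaults 1 2\n' "$uuid" "$mnt"
}

prepare_brick() {
  local dev="$1" mnt="${2:-/brick1}" uuid
  mkfs.ext4 "$dev" || return 1
  # Mount by UUID so the entry survives device renaming (sdb -> sdc, etc.)
  uuid=$(blkid -s UUID -o value "$dev") || return 1
  mkdir -p "$mnt"
  fstab_line "$uuid" "$mnt" >> /etc/fstab
  # Let systemd regenerate its mount units from the updated fstab
  systemctl daemon-reload
  mount "$mnt"
}
```

For example, run `prepare_brick /dev/sdb /brick1` on each node, then confirm the mount with `df -h /brick1`.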

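GlusterFS installation (all three nodes)

```
# Add the GlusterFS repository signing key
wget -O - https://download.gluster.org/pub/gluster/glusterfs/11/rsa.pub > /etc/apt/trusted.gpg.d/glusterfs.asc

# Add the GlusterFS 11.1 repository for Debian 12 (bookworm)
echo "deb https://download.gluster.org/pub/gluster/glusterfs/11/11.1/Debian/12/amd64/apt bookworm main" > /etc/apt/sources.list.d/glusterfs.list

# Refresh the package index so the new repository is used, then install
apt update
apt install glusterfs-server -y

# Start the service
systemctl start glusterd

# Start the service at boot
systemctl enable glusterd
```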

Create the trusted storage pool

Run on gluster-node1:

```
gluster peer probe gluster-node2
gluster peer probe gluster-node3
```

Check the cluster status:

```
# gluster peer status
Number of Peers: 2

Hostname: gluster-node2
Uuid: 294fc8bb-1b58-408e-aca5-39ffa6746c72
State: Peer in Cluster (Connected)

Hostname: gluster-node3
Uuid: d9654786-8911-458f-b751-c682678ff0e3
State: Peer in Cluster (Connected)
```
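The `gluster peer status` output above can also be checked by a script, which helps when the probes are automated. Below is a minimal sketch with hypothetical function names. It counts the `(Connected)` state lines shown in that output:

```shell
#!/bin/bash
# Hypothetical helper: read `gluster peer status` output on stdin and succeed
# only if exactly EXPECTED peers are in the "Connected" state.
peers_connected() {
  local expected="$1" n
  n=$(grep -c 'State: Peer in Cluster (Connected)')
  [ "$n" -eq "$expected" ]
}

# Poll (up to about 60 s) until the expected number of peers is connected,
# e.g. right after running the two probes on gluster-node1.
wait_for_peers() {
  local expected="${1:-2}" i
  for ((i = 0; i < 30; i++)); do
    gluster peer status | peers_connected "$expected" && return 0
    sleep 2
  done
  return 1
}
```

A peer shown as `(Disconnected)` is not counted. With one node down, `peers_connected 2` therefore fails.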

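Create the volume

On each machine, create the brick directory `/brick1/gv0` (for example with `mkdir -p /brick1/gv0`).

Then create the volume from any one node. With `replica 3 arbiter 1`, the first two bricks hold full copies of the data. The third brick (gluster-node3) is an arbiter: it stores only file names and metadata, which it uses to break ties and avoid split-brain.

```
gluster volume create gv0 replica 3 arbiter 1 \
  gluster-node1:/brick1/gv0 \
  gluster-node2:/brick1/gv0 \
  gluster-node3:/brick1/gv0
```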
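The brick directories must exist on all three nodes before the volume is created. The sketch below does both steps from one node using hypothetical helpers `run` and `create_gv0`. It assumes root SSH access from that node to all three nodes; the article does not set up SSH. Setting `DRY_RUN=1` only prints the commands:

```shell
#!/bin/bash
# Hypothetical helper: run a command, or just print it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Create /brick1/gv0 on every node over SSH (assumes root SSH access),
# then create the replica 3 / arbiter 1 volume used in this article.
create_gv0() {
  local h
  for h in gluster-node1 gluster-node2 gluster-node3; do
    run ssh "root@$h" mkdir -p /brick1/gv0 || return 1
  done
  run gluster volume create gv0 replica 3 arbiter 1 \
    gluster-node1:/brick1/gv0 \
    gluster-node2:/brick1/gv0 \
    gluster-node3:/brick1/gv0
}
```

Run `DRY_RUN=1 create_gv0` first to review the four commands, then `create_gv0` to execute them.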

Start the volume:

```
gluster volume start gv0
```

Check the volume status:

```
# gluster volume info gv0
Volume Name: gv0
Type: Replicate
Volume ID: 69d5b2c2-8031-4b5a-b1d5-df26c8592bab
Status: Started
Snapshot Count: 0
Number of Bricks: 1 x (2 + 1) = 3
Transport-type: tcp
Bricks:
Brick1: gluster-node1:/brick1/gv0
Brick2: gluster-node2:/brick1/gv0
Brick3: gluster-node3:/brick1/gv0 (arbiter)
Options Reconfigured:
cluster.granular-entry-heal: on
storage.fips-mode-rchecksum: on
transport.address-family: inet
nfs.disable: on
performance.client-io-threads: off
```

Mount the volume at /data on the Harbor nodes (10.1.1.10 and 10.1.1.11):

```
mkdir -p /data
mount -t glusterfs gluster-work:/gv0 /data
```
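The `mount` command above does not survive a reboot, and Harbor's data directory must be mounted before Harbor starts. Below is a sketch of a persistent `/etc/fstab` entry built by a hypothetical helper. `_netdev` marks the entry as a network filesystem, so it is mounted after the network is up. The GlusterFS mount option `backup-volfile-servers` names other nodes from which the client can fetch the volume layout if the first server is unreachable at mount time. It can be used alongside, or instead of, resolving `gluster-work` to several addresses:

```shell
#!/bin/bash
# Hypothetical helper: print an /etc/fstab line for a GlusterFS volume.
#   $1 = volfile server, $2 = volume, $3 = mount point,
#   $4 = optional colon-separated list of backup volfile servers
gluster_fstab_line() {
  local server="$1" volume="$2" mnt="$3" backups="${4:-}"
  local opts="defaults,_netdev"
  if [ -n "$backups" ]; then
    opts="$opts,backup-volfile-servers=$backups"
  fi
  printf '%s:/%s %s glusterfs %s 0 0\n' "$server" "$volume" "$mnt" "$opts"
}
```

For example:

```
gluster_fstab_line gluster-node1 gv0 /data gluster-node2:gluster-node3 >> /etc/fstab
systemctl daemon-reload
```

The entry then takes effect at the next boot.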

harbor

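Installer package and configuration

Offline installer package 2.11.2: Download

Edit harbor.yml so that the hostname is the VIP and the persistent data directory is on the GlusterFS mount:

```yaml
# hostname: the keepalived VIP
hostname: 10.1.1.15
data_volume: /data/harbor_data_volume
```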

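Installation

On both Harbor nodes (10.1.1.10 and 10.1.1.11), run `./install.sh` from the extracted `harbor` directory.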
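Both Harbor nodes need identical settings. Below is a minimal sketch using a hypothetical function `set_harbor_yml`. It rewrites only the top-level `hostname:` and `data_volume:` keys with `sed`, and assumes `harbor.yml` was copied from the `harbor.yml.tmpl` shipped in the offline package. The other settings in the template, such as the `https` section, still need to be reviewed by hand:

```shell
#!/bin/bash
# Hypothetical helper: set hostname and data_volume in a harbor.yml file.
# Only lines that start with the key (top-level, unindented) are changed.
set_harbor_yml() {
  local file="$1" host="$2" data="$3"
  sed -i \
    -e "s|^hostname:.*|hostname: ${host}|" \
    -e "s|^data_volume:.*|data_volume: ${data}|" \
    "$file"
}

# Example on each Harbor node (package file name assumed for v2.11.2):
#   tar xzvf harbor-offline-installer-v2.11.2.tgz && cd harbor
#   cp harbor.yml.tmpl harbor.yml
#   set_harbor_yml harbor.yml 10.1.1.15 /data/harbor_data_volume
#   ./install.sh
```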

keepalived

Installation (on 10.1.1.10 and 10.1.1.11)

```
apt install keepalived -y
```

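Configuration

Health-check script /etc/keepalived/check_port.sh. Make it executable with `chmod +x /etc/keepalived/check_port.sh`. It needs the `nc` command, for example from the netcat-openbsd package.

```bash
#!/bin/bash
# Check whether the local Harbor port accepts TCP connections.
# keepalived treats exit code 0 as healthy and non-zero as failed.
TARGET_IP="127.0.0.1"
TARGET_PORT="5000"

# nc -z: only probe the port without sending data; -w2: give up after 2 seconds
if nc -z -w2 "$TARGET_IP" "$TARGET_PORT"; then
  # Port reachable
  exit 0
else
  # Port unreachable
  exit 1
fi
```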
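The script above depends on `nc`. If that package is not installed, bash's built-in `/dev/tcp` redirection plus `timeout` from coreutils can do the same check. The sketch below, with a hypothetical function name `port_open`, contains only the check itself. Used as `check_port.sh`, the file would end with the call `port_open 127.0.0.1 5000`, whose exit status keepalived reads:

```shell
#!/bin/bash
# Hypothetical nc-free TCP check: succeed if HOST:PORT accepts a connection
# within 2 seconds (the same limit as `nc -w2`).
port_open() {
  local host="$1" port="$2"
  # The inner bash opens fd 3 on /dev/tcp/HOST/PORT; a refused or timed-out
  # connection makes it (or timeout) exit non-zero.
  timeout 2 bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$host" "$port" 2>/dev/null
}
```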

MASTER (/etc/keepalived/keepalived.conf on 10.1.1.10)

```
vrrp_script chk_5000 {
    script "/etc/keepalived/check_port.sh"
    # Run the check every 2 seconds
    interval 2
    # Lower the priority by 20 while the check fails
    weight -20
}

vrrp_instance VI_1 {
    state MASTER
    interface ens192
    virtual_router_id 51
    priority 100
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass xxxxxx
    }
    virtual_ipaddress {
        10.1.1.15
    }
    track_script {
        chk_5000
    }
}
```

BACKUP (/etc/keepalived/keepalived.conf on 10.1.1.11)

```
vrrp_script chk_5000 {
    script "/etc/keepalived/check_port.sh"
    # Run the check every 2 seconds
    interval 2
    # Lower the priority by 20 while the check fails
    weight -20
}

vrrp_instance VI_1 {
    state BACKUP
    interface ens192
    virtual_router_id 51
    priority 90
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass xxxxxx
    }
    virtual_ipaddress {
        10.1.1.15
    }
    track_script {
        chk_5000
    }
}
```
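The priorities of 100 and 90 and the `weight -20` are chosen so that one failed health check is enough to move the VIP. With a negative weight, keepalived lowers a node's priority by the weight's magnitude while the tracked script fails. The arithmetic is shown below as a small illustrative function, which is not part of keepalived:

```shell
#!/bin/bash
# Illustrative only: effective VRRP priority of a node whose tracked script
# has a negative weight. CHECK_OK=1 means the script exits 0.
effective_priority() {
  local base="$1" weight="$2" check_ok="$3"
  if [ "$check_ok" = 1 ]; then
    echo "$base"
  else
    echo $((base + weight))
  fi
}

# Harbor down on the MASTER only: 100 - 20 = 80 < 90, so the BACKUP takes the VIP.
# Harbor down on both nodes: 80 vs 70, so the MASTER keeps the VIP.
```

Any negative weight larger in magnitude than the 10-point gap between the two priorities would behave the same way.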

Testing

Test 1: stop Harbor on the MASTER node and check whether the service is affected

Stop the Harbor services on the master node manually, for example with `docker compose down` in the Harbor installation directory.

Check whether the VIP has moved to the BACKUP node (run `ip addr` on 10.1.1.11):

```
2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:50:56:9d:d6:56 brd ff:ff:ff:ff:ff:ff
    altname enp11s0
    inet 10.1.1.11/24 brd 10.20.3.255 scope global ens192
       valid_lft forever preferred_lft forever
    inet 10.1.1.15/32 scope global ens192
       valid_lft forever preferred_lft forever
    inet6 fe80::250:56ff:fe9d:d656/64 scope link
       valid_lft forever preferred_lft forever
```
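Instead of reading the full `ip addr` output, a script can check for the VIP directly. Below is a minimal sketch with hypothetical helper names. It reads `ip -4 addr show` output on stdin and matches the exact address followed by its prefix length, so 10.1.1.15 does not also match 10.1.1.150:

```shell
#!/bin/bash
# Hypothetical helper: succeed if the `ip addr` output on stdin contains VIP.
has_vip() {
  # -F matches literally, so the dots are not regex wildcards; the trailing
  # "/" stops 10.1.1.15 from matching 10.1.1.150.
  grep -qF "inet $1/"
}

# Print once per second whether this node holds the VIP (Ctrl-C to stop);
# useful to watch on both keepalived nodes during a failover test.
watch_vip() {
  local vip="${1:-10.1.1.15}" dev="${2:-ens192}"
  while true; do
    if ip -4 addr show dev "$dev" | has_vip "$vip"; then
      echo "$(date +%T) $(hostname) holds $vip"
    else
      echo "$(date +%T) $(hostname) does not hold $vip"
    fi
    sleep 1
  done
}
```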

Result: the Harbor web UI is reachable, and image push and pull work normally.

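Test 2: stop the storage service on one node and check whether the service is affected

Stop glusterd on one node (gluster-node1 in the status output that follows). This stops only the management daemon. The brick process (glusterfsd) keeps serving data until it is stopped as well.

```
systemctl stop glusterd
```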

Check the storage state (this output is from gluster-node2):

```
# gluster peer status
Number of Peers: 2

Hostname: gluster-node1
Uuid: 27b4fb21-b7a7-4935-849b-d9aca41b920d
State: Peer in Cluster (Disconnected)

Hostname: gluster-node3
Uuid: d9654786-8911-458f-b751-c682678ff0e3
State: Peer in Cluster (Connected)
```
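When the stopped node comes back, GlusterFS self-heal copies over the writes it missed. Until that finishes, files written during the outage exist on fewer bricks. Below is a minimal sketch with hypothetical helpers. It sums the `Number of entries:` lines printed by `gluster volume heal gv0 info` and waits until nothing is pending:

```shell
#!/bin/bash
# Hypothetical helper: read `gluster volume heal VOLUME info` output on stdin
# and print the total number of entries still waiting to be healed.
# A brick that is down prints "Number of entries: -", which awk counts as 0,
# so only rely on this once all bricks are back online.
heal_pending() {
  awk -F': ' '/^Number of entries:/ { sum += $2 } END { print sum + 0 }'
}

# Poll until no entries are pending (up to 60 checks, 10 s apart).
wait_for_heal() {
  local volume="${1:-gv0}" i
  for ((i = 0; i < 60; i++)); do
    if [ "$(gluster volume heal "$volume" info | heal_pending)" -eq 0 ]; then
      return 0
    fi
    sleep 10
  done
  return 1
}
```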

Result: the Harbor web UI is reachable, and image push and pull work normally.

Test 3: power off a machine and check whether the service is affected

Shut down the master node (10.1.1.10) to verify high availability of both storage and Harbor.

Check whether the VIP has moved to the BACKUP node:

```
2: ens192: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000
    link/ether 00:50:56:9d:d6:56 brd ff:ff:ff:ff:ff:ff
    altname enp11s0
    inet 10.1.1.11/24 brd 10.20.3.255 scope global ens192
       valid_lft forever preferred_lft forever
    inet 10.1.1.15/32 scope global ens192
       valid_lft forever preferred_lft forever
    inet6 fe80::250:56ff:fe9d:d656/64 scope link
       valid_lft forever preferred_lft forever
```

Result: the Harbor web UI is reachable, and image push and pull work normally.
